Welcome

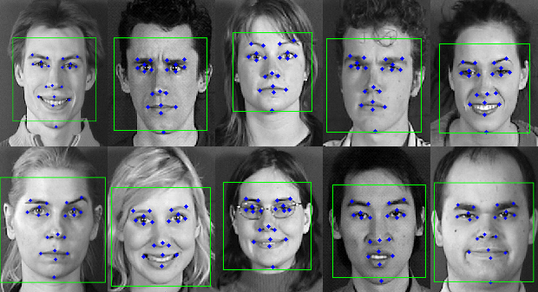

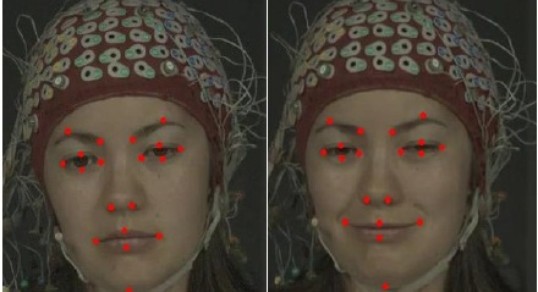

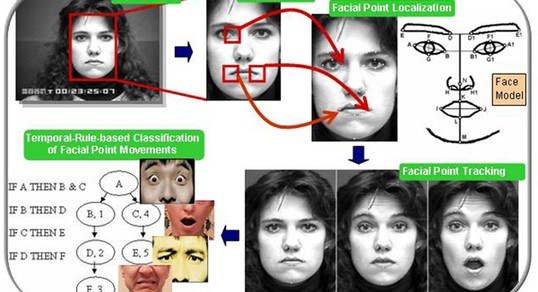

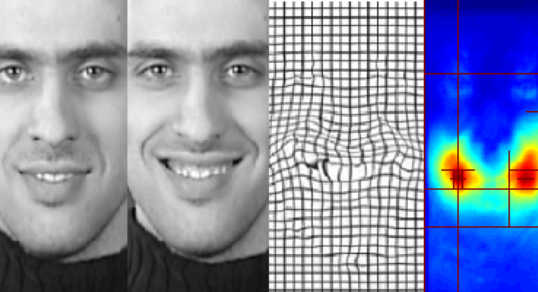

The core expertise of the iBUG group is the machine analysis of human behaviour in space and time. This includes face analysis, body gesture analysis, visual, audio, and multimodal analysis of human behaviour, and biometrics analysis. The group's application areas include face analysis, body gesture analysis, audio and visual human behaviour analysis, biometrics and behaviometrics, and human-computer interaction (HCI).

Latest News

CVPR 2023: 5th Workshop and Competition on Affective Behavior Analysis in-the-wild (ABAW)

We are organizing a workshop and a competition at the IEEE Conference on Computer Vision and Pattern Recognition (CVPR) 2023. The competition is split into four challenges: i) valence-arousal estimation, ii) expression classification, iii) action unit detection, and iv) emotional reaction intensity estimation. More details can be found here. The competition has started!

ECCV 2022: 4th Workshop and Competition on Affective Behavior Analysis in-the-wild (ABAW)

We are organizing a workshop and a competition at the European Conference on Computer Vision (ECCV) 2022. The competition is split into two challenges: i) multi-task learning and ii) learning from synthetic data. The competition is based on Aff-Wild2. More details can be found here. The competition has started!

CVPR 2022: 3rd Workshop and Competition on Affective Behavior Analysis in-the-wild (ABAW)

We are organizing a workshop and a competition at the IEEE Conference on Computer Vision and Pattern Recognition (CVPR) 2022. The competition is split into four challenges: i) valence-arousal estimation, ii) expression classification, iii) action unit detection, and iv) multi-task learning. The competition is based on Aff-Wild2, which is annotated for all of these behaviour tasks. More details can be found here. The competition has started!

ICCV 2021: 2nd Workshop and Competition on Affective Behavior Analysis in-the-wild (ABAW)

We are organizing a workshop and a competition at the International Conference on Computer Vision (ICCV) 2021. The competition is split into three challenge tracks: i) valence-arousal estimation, ii) classification of the 7 basic expressions, and iii) detection of 17 action units. The competition is based on Aff-Wild2, which is annotated for all of these behaviour tasks. More details can be found here. The competition has started!

iBUG Chair interview for ITV News at 22:00 (aired on 21 December 2016)

The interview of Prof. Maja Pantic can be found here.

Latest Publications

Deep Neural Network Augmentation: Generating Faces for Affect Analysis

D. Kollias, S. Cheng, E. Ververas, I. Kotsia, S. Zafeiriou. International Journal of Computer Vision. 2020.

Expression, Affect, Action Unit Recognition: Aff-Wild2, Multi-Task Learning and ArcFace

D. Kollias, S. Zafeiriou. British Machine Vision Conference, Cardiff. September 2019.

Realistic Speech-Driven Facial Animation with GANs

K. Vougioukas, S. Petridis, M. Pantic. International Journal of Computer Vision. 2019.